Rust ❤️ Bela – SIMD Sines

Posted

Last time I wrote about how to cross-compile code for and run it on the Bela using Rust, but there is still a lot of ground to cover. I haven't gone into any detail about what I'm even planning to do with the Bela or how to access the Bela-specific APIs. In this post, I'll go into the former, including some basic feasibility checks which will involve — you may have guessed it from the title — SIMD and sines.

As mentioned last time, the Bela is an extension board that allows you to achieve ultra-low latency while processing sensors and audio by using a customized Linux with hard real-time extensions. If you are interested in what can be done with the Bela in general, check out their blog for a bunch of creative electronic instruments, art installations, and other projects by makers around the world. In any case, I am planning two projects:

To get to know the Bela hardware, the Bela APIs, and Bela's performance characteristics, I decided a simple(ish) project that doesn't require special external hardware or other large up front investments — aside from the Bela itself — would be a good idea. Essentially something that would just as well work as a VST plugin. A virtual instrument. In this particular case: a virtual tone wheel organ. Ok, maybe not that simple.

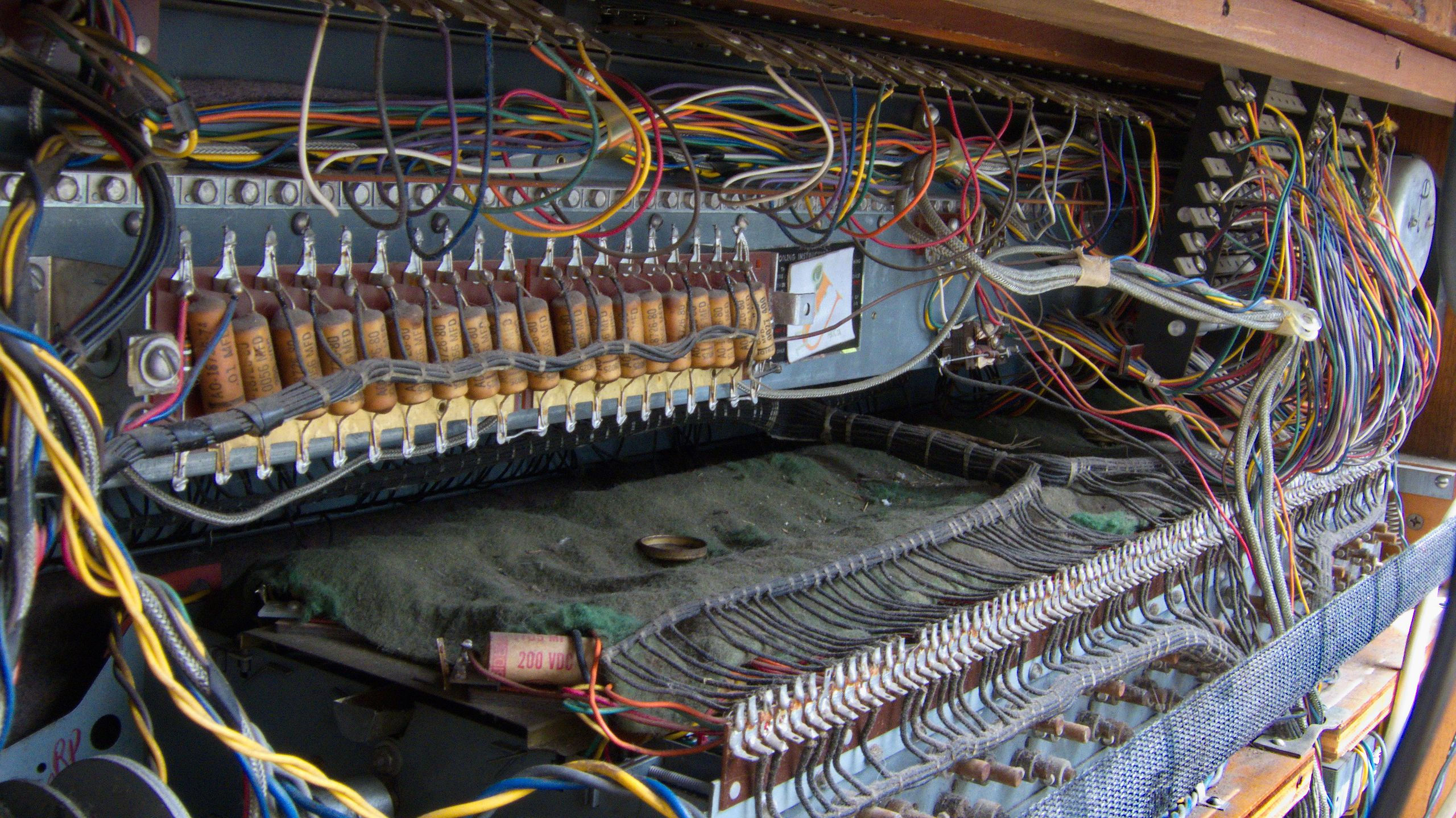

Henry Zbyszynski, CC BY 2.0 <https://creativecommons.org/licenses/by/2.0>, via Wikimedia Commons When (if?!) I finish that project, the second step is to go for something more… physical. While I wrote a number of VST plugins, mostly work “in the box,” and just wrote that I plan to create a virtual instrument, I am not an electronic musician… or even a passable keyboard player. I mostly play bass (electric and occasionally double) and some guitar.

I have been fascinated by the possibilities of polyphonic pickups for quite some time now. Therefore, project number two will be taking a kit (or cheap) guitar or bass (haven't quite decided yet) and adding a set of Cycfi Nu polyphonic pickups, and processing the polyphonic signals on the Bela. And if I want to get really fancy, I may add an XY-pad or other weird input channel. Maybe a gyro?!

Note to future self: don't let that “if” become reality, you already have the pickups! Does anyone want to fund future self a Crimson Guitars guitar building course for extra motivation? 😅

Now let's get started. So the plan is to model a classic tone wheel organ as closely as possible — based on specs available around the web — with as low latency as possible. One important thing to note about low latency and real time: that doesn't mean fast! In fact, reducing buffer sizes (and the Bela can go real small, i.e., very low latency) increases the number of context switches, using up more processing power for things that aren't actual audio processing. Also, the BeagleBone Black and its 32-bit Cortex-A8 running at 1 GHz were introduced in 2013, so it's not exactly the fastest single board computer on the market (for comparison: a current Raspberry Pi has a quad-core 64-bit Cortex-A72 running at 1.5 GHz!). While work is currently under way to get Bela working with the recently released (well, in 2019) BeagleBone AI and its dual-core Cortex-A15 running at 1.5 GHz, we'll have to wait for that a little longer.

The classic tone wheel organ (I'm pretty sure almost every musician knows which one I'm talking about) has a total of 91 approximately sinusoidal tone generators. Actually, the lowest octave has a much weirder waveform, but let's assume sinusoidal for now. Since I want to model as much of the organ's real-world weirdness, including cross-talk between tone wheels, fixed phase between certain pairs of wheels, and full polyphony, there is no real alternative to actually generating 91 sines all the time. Not even complex oscillators, which can be really high quality for very little effort, since I really hope to be able to model the phase noise due to imperfect coupling. And maybe even simulate turning off the motor for the organ equivalent of a whammy bar dive bomb. Also, if we can't generate them all the time we can't occasionally generate them in time either.

First things first, now that we know what the project will be, let's rename our hello project to bela-3. We have to rename both the folder and the package name in Cargo.toml.

For a variety of reasons, I am a big fan of using an unsigned fixed-point number to represent the current phase. When using an unsigned fixed-point number in Q0.32 format, i.e., using all 32 bits of a u32 as fractional bits, you can represent values in the open range at a constant resolution of and get wrapping for free. No need for expensive floating-point remainder calculations (when using , for f32.fract will do) or conditionals to keep the value in range. Additionally, this description works really well with power-of-two wavetables: you just use the top bits to index a -sample wavetable and use the remaining bits for interpolation. Furthermore, it is easy to determine in which quadrant of a sine/cosine we are, just by looking at the top two bits — you'll see why this is relevant soon.

Since premature optimization is the root of all evil, to quote Donald Knuth, let's first try the direct way: just rescaling our number in (well, technically in , represented by integers in ) to by multiplying it with and using the built-in f32::sin function. So let's add a new file src/sines.rs to our project:

use std::num::Wrapping;

pub fn sines_std(phasors: &[Wrapping<u32>; 91]) -> [f32; 91] {

let mut ret = [0.0; 91];

for (p, r) in phasors.iter().zip(ret.iter_mut()) {

*r = (std::f32::consts::TAU / (1u64 << 32) as f32 * p.0 as f32)

.sin();

}

ret

}

Yes, Rust supports the full circle constant . Since we want to measure how much time generating our 91 sines takes, we need to benchmark. I will be using the awesome criterion crate for the benchmarking. However, writing a benchmark requires a library crate and so far we only have a binary crate. But our recently-renamed bela_3 isn't actually a crate. It's a package that contains a binary crate. So we can simply add a library crate to our existing package that so far only contains a binary crate. In fact, doing so and placing most of the implementation inside the same package and using the library crate within the binary crate isn't uncommon. How to we do this? Just add src/lib.rs and while we're at it — introduce and use our sines module, otherwise sines.rs wouldn't be compiled.

// introduce sines privately and use it publically to avoid having to

// write bela_3::sines::XY everywhere

mod sines;

pub use sines::*;

Now we need to add the criterion crate to our [dev-dependencies] in Cargo.toml and declare our benchmark:

[dev-dependencies]

criterion = { version = "0.3", features = [ "html_reports" ] }

[[bench]]

name = "sine_benchmark"

harness = false

Finally, we define our benchmark in benches/sine_benchmark.rs:

use bela_3::*;

use criterion::{

black_box, criterion_group, criterion_main, Criterion,

};

use std::num::Wrapping;

fn criterion_benchmark(c: &mut Criterion) {

// create a set of phasors as Wrapping<u32>, since Rust u32 are

// generally not wrapping (overflow checking is only enabled in

// debug mode by default) in Rust

let phasors = {

let mut phasors = [Wrapping(0u32); 91];

let mut x = Wrapping(0u32);

for phasor in phasors.iter_mut() {

*phasor = x;

x += Wrapping(0x3fff_ffff);

}

phasors

};

// create a benchmark group as we want to compare variants of a

// particular function

let mut group = c.benchmark_group("sines");

group.bench_function("std", |b| {

b.iter(|| sines_std(black_box(&phasors)))

});

group.finish();

}

criterion_group!(benches, criterion_benchmark);

criterion_main!(benches);

Now let's run our benchmark using cargo bench --bench sine_benchmark -- --noplot, since cargo bench will also run all tests — including the auto-generated empty unit- and doctest executables — which don't support the --noplot option. And we definitely want to pass the --noplot option, as rendering the plots and corresponding HTML reports will take forever and a day on the Bela. Didn't I explicitly enable the html_reports feature in our Cargo.toml? Well, yes, but that was only to silence a deprecation warning, which pops up even if we pass --noplot.

sines/std time: [55.405 us 55.494 us 55.609 us]

Found 22 outliers among 100 measurements (22.00%)

1 (1.00%) high mild

21 (21.00%) high severe

55.5 μs? Quick back of the envelope calculation…

Leaving us with… −32.8 μs to do everything else when computing one output sample. Well, that's no good. I think we would have to violate causality to get this version to work, so let's optimize a little. Since we don't need our sines to be precise within a few ULPS, those tone wheels aren't perfect either, a variety of approximations are possible. Wavetables come to mind, however those don't vectorize too well (gather/scatter operations for indexing/indirection are only available in some high-end instruction sets) and memory clocks haven't been increasing as quickly as CPU clocks for many years. The latter isn't too bad if the tables stay in L1 cache, but since we want to keep latency low — incurring many context switches — frequent cache evictions are very likely. So instead I chose a simple, cubic approximation within the quadrant, with the top two bits of our phase being used to flip the polynomial / change the sign. I'm using the same approach as in this article, except that I only have a fixed-point phase, not a fixed-point result.

fn sin_quadrant_cubic(phasor: Wrapping<u32>) -> f32 {

let x = phasor.0;

// set q13 to u32::MAX if we are in the second or fourth quadrant,

// otherwise set it to 0, using one shift to move the corresponding

// bit to the most significant bit and a sign-extending shift to

// set the other bits

let q13 = (x.wrapping_shl(1) as i32 >> 31) as u32;

// flip xm, depending on the quadrant we're in and discard the sign

// bit which we'll be adding back later

let xm = ((x.wrapping_neg() & q13) | (x & !q13)) & 0x7fff_ffff;

// convert our Q1.30 value (we discarded the topmost bit) to an f32

// in [0, 1]

let xmf = xm as f32 / 0x4000_0000 as f32;

// use the top bit of the input phasor as the sign of our cubic

// polynomial approximation

let sign = f32::from_bits(x);

f32::copysign(1.5 * xmf - 0.5 * xmf.powi(3), sign)

}

pub fn sines_quadrant_cubic(

phasors: &[Wrapping<u32>; 91],

) -> [f32; 91] {

let mut ret = [0.0; 91];

for (p, r) in phasors.iter().zip(ret.iter_mut()) {

*r = sin_quadrant_cubic(*p);

}

ret

}

While other cubic approximations have lower error, this lower error comes at the cost of discontinuities at quadrant edges, creating some very unpleasant harmonics and aliasing. So in this case, the absolute error in value may be larger, but the (perceived) harmonic error is much smaller. So then we can add a benchmark… “Wait a second,” I hear you scream at your monitor “, aren't you about to skip a critical step there?” And as a matter of fact, I was! While this isn't premature optimization, as the naïve version is definitely too slow, we shouldn't start looking at performance before checking if all that bit twiddling is even correct! There's a good reason that cargo bench also runs all tests. So let's take care of that oversight and add some tests, using Rust's wonderful built-in testing features (and the approx crate for approximate floating-point assertions, which I added to my [dev-dependencies]), by adding the following to src/sines.rs:

#[cfg(test)]

mod tests {

use approx::assert_abs_diff_eq;

use std::num::Wrapping;

fn reference_phasors() -> [Wrapping<u32>; 91] {

let scale = (1u64 << 32) as f64;

let scale = |f| (scale * f) as u32;

let mut phasors = [Wrapping(0); 91];

phasors[0] = Wrapping(scale(0.0)); // 0

phasors[1] = Wrapping(scale(0.125)); // τ/8

phasors[2] = Wrapping(scale(0.25)); // τ/4

phasors[3] = Wrapping(scale(0.375)); // 3τ/8

phasors[4] = Wrapping(scale(0.5)); // τ/2 = π

phasors[5] = Wrapping(scale(0.625)); // 5τ/8

phasors[6] = Wrapping(scale(0.75)); // 3τ/4

phasors[7] = Wrapping(scale(0.875)); // 7τ/8

phasors[8] = Wrapping(u32::MAX); // ~τ

phasors

}

fn check_reference_results(sines: &[f32; 91]) {

use std::f32::consts::TAU;

// As we are looking at approximations of sines, allow for an

// error of 0.02

assert_abs_diff_eq!(

sines[0],

f32::sin(TAU * 0.0),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[1],

f32::sin(TAU * 0.125),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[2],

f32::sin(TAU * 0.25),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[3],

f32::sin(TAU * 0.375),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[4],

f32::sin(TAU * 0.5),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[5],

f32::sin(TAU * 0.625),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[6],

f32::sin(TAU * 0.75),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[7],

f32::sin(TAU * 0.875),

epsilon = 0.02

);

assert_abs_diff_eq!(

sines[8],

f32::sin(TAU * 1.0),

epsilon = 0.02

);

}

#[test]

fn test_sines_std() {

use crate::sines_std;

let phasors = reference_phasors();

let sines = sines_std(&phasors);

check_reference_results(&sines);

}

#[test]

fn test_quadrant_cubic() {

use crate::sines_quadrant_cubic;

let phasors = reference_phasors();

let sines = sines_quadrant_cubic(&phasors);

check_reference_results(&sines);

}

}

Drumroll (and cargo test), please… Two tests passed! Awesome, but always better to be safe than sorry, especially with bit-twiddling hacks like these. And truth be told, there was a mistake in an earlier draft: I discarded the top two bits (i.e., the quadrant) before the wrapping negation, while I should have dropped only the topmost bit after wrapping negation, so xmf wasn't in anymore. Having made sure that everything works, we can add an additional benchmark (between our current one and group.finish()) to sine_benchmarks.rs.

group.bench_function("quadrant_cubic", |b| {

b.iter(|| sines_quadrant_cubic(black_box(&phasors)))

});

And run it.

sines/quadrant_cubic time: [8.1137 us 8.1412 us 8.1934 us]

Found 9 outliers among 100 measurements (9.00%)

2 (2.00%) low mild

2 (2.00%) high mild

5 (5.00%) high severe

Much better, but still more than a third of our time budget. How do we find out if the code the compiler produces is anywhere near optimal? Or if it uses the NEON instruction set we enabled at the end of the last post? The most direct way is by looking at the assembly output, which we can get at using objdump, or in this case, as we're looking at a cross-compiled executable, our cross-compiler's objdump:

arm-linux-gnueabihf-objdump -dr target\armv7-unknown-linux-gnueabihf\release\deps\sine_benchmark-46d3108f9d479ee1 > disassembly.asm

The exact name of the executable, in particular the hash at the end, will vary, so make sure to dump the executable listed while running cargo bench. If you're on Linux or Mac, you'll have to use forward slashes too. In either case, though, make absolutely sure to pipe the output to a file, as it can get very, very large. Then search in the output file for the name of our function sines_quadrant_cubic. There will be additional stuff at the beginning and the end of the symbol's name due to name mangling.

001b8618 <_ZN6bela_35sines20sines_quadrant_cubic17hf39864046bb5dcf6E>:

1b8618: e92d4070 push {r4, r5, r6, lr}

1b861c: e1a04001 mov r4, r1

1b8620: e3a01000 mov r1, #0

1b8624: e3a02f5b mov r2, #364 ; 0x16c

1b8628: e1a05000 mov r5, r0

1b862c: e3a06000 mov r6, #0

1b8630: ebf94991 bl ac7c <memset@plt>

1b8634: eeb71a08 vmov.f32 s2, #120 ; 0x3fc00000 1.5

1b8638: ed9f0a16 vldr s0, [pc, #88] ; 1b8698 <_ZN6bela_35sines20sines_quadrant_cubic17hf39864046bb5dcf6E+0x80>

1b863c: eebe2a00 vmov.f32 s4, #224 ; 0xbf000000 -0.5

1b8640: f3c00610 vmov.i32 d16, #-2147483648 ; 0x80000000

1b8644: e7940006 ldr r0, [r4, r6]

1b8648: f26011b0 vorr d17, d16, d16

1b864c: e3100101 tst r0, #1073741824 ; 0x40000000

1b8650: ee030a10 vmov s6, r0

1b8654: 12600000 rsbne r0, r0, #0

1b8658: e3c00102 bic r0, r0, #-2147483648 ; 0x80000000

1b865c: ee040a10 vmov s8, r0

1b8660: e0850006 add r0, r5, r6

1b8664: e2866004 add r6, r6, #4

1b8668: eeb84a44 vcvt.f32.u32 s8, s8

1b866c: e3560f5b cmp r6, #364 ; 0x16c

1b8670: ee244a00 vmul.f32 s8, s8, s0

1b8674: ee245a04 vmul.f32 s10, s8, s8

1b8678: ee245a05 vmul.f32 s10, s8, s10

1b867c: ee244a01 vmul.f32 s8, s8, s2

1b8680: ee255a02 vmul.f32 s10, s10, s4

1b8684: ee344a05 vadd.f32 s8, s8, s10

1b8688: f3531114 vbsl d17, d3, d4

1b868c: f4c0183f vst1.32 {d17[0]}, [r0 :32]

1b8690: 1affffeb bne 1b8644 <_ZN6bela_35sines20sines_quadrant_cubic17hf39864046bb5dcf6E+0x2c>

1b8694: e8bd8070 pop {r4, r5, r6, pc}

1b8698: 30800000 .word 0x30800000

Looks ok, aside from that annoying memset which the compiler really should be able to avoid, but it seems no auto-vectorization was performed (s6, s8, etc. are single-element registers). Just for fun, let's see what happens when running it natively on our x86_64 desktop machine.

sines/std time: [446.49 ns 447.54 ns 448.68 ns]

Found 6 outliers among 100 measurements (6.00%)

4 (4.00%) high mild

2 (2.00%) high severe

sines/quadrant_cubic time: [98.704 ns 99.057 ns 99.386 ns]

Found 10 outliers among 100 measurements (10.00%)

10 (10.00%) high mild

That is… a bit faster. But while my desktop can perform the DSP much more quickly, there is absolutely no chance to get the buffer size down to 2 samples (the lowest setting is 32 samples on my RME HDSPe AIO, which is a high-quality professional audio interface), which the Bela can, due to its RTOS kernel. And the code got auto-vectorized too, as evidenced by the mulps, addps, and other packed SSE2 instructions (you can also see the three final samples being computed using scalar instructions, as 91 isn't divisible by four — the pointless memset remains, however):

00000000001e7890 <_ZN6bela_35sines20sines_quadrant_cubic17hf4a7fc3f0238a853E>:

1e7890: 41 57 push %r15

1e7892: 41 56 push %r14

1e7894: 53 push %rbx

1e7895: 49 89 f7 mov %rsi,%r15

1e7898: 49 89 fe mov %rdi,%r14

1e789b: 31 db xor %ebx,%ebx

1e789d: ba 6c 01 00 00 mov $0x16c,%edx

1e78a2: 31 f6 xor %esi,%esi

1e78a4: ff 15 06 03 0e 00 callq *0xe0306(%rip) # 2c7bb0 <memset@GLIBC_2.2.5>

1e78aa: 66 0f 6f 05 9e de 04 movdqa 0x4de9e(%rip),%xmm0 # 235750 <anon.be0cd5bf402d6cd1684266fd1b25d153.1.llvm.9828885523271912942+0xa3>

1e78b1: 00

1e78b2: 0f 28 0d f7 b0 06 00 movaps 0x6b0f7(%rip),%xmm1 # 2529b0 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x119>

1e78b9: 0f 28 15 00 b1 06 00 movaps 0x6b100(%rip),%xmm2 # 2529c0 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x129>

1e78c0: 0f 28 1d 09 b1 06 00 movaps 0x6b109(%rip),%xmm3 # 2529d0 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x139>

1e78c7: 66 0f 6f 25 11 b1 06 movdqa 0x6b111(%rip),%xmm4 # 2529e0 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x149>

1e78ce: 00

1e78cf: 90 nop

1e78d0: f3 41 0f 6f 2c 9f movdqu (%r15,%rbx,4),%xmm5

1e78d6: 66 0f 6f f5 movdqa %xmm5,%xmm6

1e78da: 66 0f 72 f6 01 pslld $0x1,%xmm6

1e78df: 66 0f 72 e6 1f psrad $0x1f,%xmm6

1e78e4: 66 0f 6f fd movdqa %xmm5,%xmm7

1e78e8: 66 0f ef fe pxor %xmm6,%xmm7

1e78ec: 66 0f fa fe psubd %xmm6,%xmm7

1e78f0: 66 0f db f8 pand %xmm0,%xmm7

1e78f4: 0f 5b f7 cvtdq2ps %xmm7,%xmm6

1e78f7: 0f 59 f1 mulps %xmm1,%xmm6

1e78fa: 0f 28 fe movaps %xmm6,%xmm7

1e78fd: 0f 59 fe mulps %xmm6,%xmm7

1e7900: 0f 59 fe mulps %xmm6,%xmm7

1e7903: 0f 59 f2 mulps %xmm2,%xmm6

1e7906: 0f 59 fb mulps %xmm3,%xmm7

1e7909: 0f 58 fe addps %xmm6,%xmm7

1e790c: 0f 54 f8 andps %xmm0,%xmm7

1e790f: 66 0f db ec pand %xmm4,%xmm5

1e7913: 66 0f eb ef por %xmm7,%xmm5

1e7917: f3 41 0f 7f 2c 9e movdqu %xmm5,(%r14,%rbx,4)

1e791d: 48 83 c3 04 add $0x4,%rbx

1e7921: 48 83 fb 58 cmp $0x58,%rbx

1e7925: 75 a9 jne 1e78d0 <_ZN6bela_35sines20sines_quadrant_cubic17hf4a7fc3f0238a853E+0x40>

1e7927: 41 8b 87 60 01 00 00 mov 0x160(%r15),%eax

1e792e: 89 c1 mov %eax,%ecx

1e7930: f7 d9 neg %ecx

1e7932: a9 00 00 00 40 test $0x40000000,%eax

1e7937: 0f 44 c8 cmove %eax,%ecx

1e793a: 81 e1 ff ff ff 7f and $0x7fffffff,%ecx

1e7940: 0f 57 db xorps %xmm3,%xmm3

1e7943: f3 0f 2a d9 cvtsi2ss %ecx,%xmm3

1e7947: f3 0f 10 15 a9 b0 06 movss 0x6b0a9(%rip),%xmm2 # 2529f8 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x161>

1e794e: 00

1e794f: f3 0f 59 da mulss %xmm2,%xmm3

1e7953: 66 0f 6e e0 movd %eax,%xmm4

1e7957: f3 0f 10 0d 9d b0 06 movss 0x6b09d(%rip),%xmm1 # 2529fc <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x165>

1e795e: 00

1e795f: 0f 28 eb movaps %xmm3,%xmm5

1e7962: f3 0f 59 eb mulss %xmm3,%xmm5

1e7966: f3 0f 59 eb mulss %xmm3,%xmm5

1e796a: 0f 28 f3 movaps %xmm3,%xmm6

1e796d: f3 0f 59 f1 mulss %xmm1,%xmm6

1e7971: f3 0f 10 1d 87 b0 06 movss 0x6b087(%rip),%xmm3 # 252a00 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x169>

1e7978: 00

1e7979: f3 0f 59 eb mulss %xmm3,%xmm5

1e797d: f3 0f 5c f5 subss %xmm5,%xmm6

1e7981: 0f 54 f0 andps %xmm0,%xmm6

1e7984: 66 0f db 25 54 b0 06 pand 0x6b054(%rip),%xmm4 # 2529e0 <anon.eab9b9e9888d57a198cd6903a1003a27.17.llvm.10396227554844612960+0x149>

1e798b: 00

1e798c: 66 0f eb e6 por %xmm6,%xmm4

1e7990: 41 8b 87 64 01 00 00 mov 0x164(%r15),%eax

1e7997: 89 c1 mov %eax,%ecx

1e7999: f7 d9 neg %ecx

1e799b: a9 00 00 00 40 test $0x40000000,%eax

1e79a0: 0f 44 c8 cmove %eax,%ecx

1e79a3: 81 e1 ff ff ff 7f and $0x7fffffff,%ecx

1e79a9: 0f 57 ed xorps %xmm5,%xmm5

1e79ac: f3 0f 2a e9 cvtsi2ss %ecx,%xmm5

1e79b0: 66 41 0f 7e a6 60 01 movd %xmm4,0x160(%r14)

1e79b7: 00 00

1e79b9: f3 0f 59 ea mulss %xmm2,%xmm5

1e79bd: 66 0f 6e e0 movd %eax,%xmm4

1e79c1: 0f 28 f5 movaps %xmm5,%xmm6

1e79c4: f3 0f 59 f5 mulss %xmm5,%xmm6

1e79c8: f3 0f 59 f5 mulss %xmm5,%xmm6

1e79cc: f3 0f 59 e9 mulss %xmm1,%xmm5

1e79d0: f3 0f 59 f3 mulss %xmm3,%xmm6

1e79d4: f3 0f 5c ee subss %xmm6,%xmm5

1e79d8: 0f 54 e8 andps %xmm0,%xmm5

1e79db: 66 0f 6f f0 movdqa %xmm0,%xmm6

1e79df: 66 0f df f4 pandn %xmm4,%xmm6

1e79e3: 66 0f eb f5 por %xmm5,%xmm6

1e79e7: 41 8b 87 68 01 00 00 mov 0x168(%r15),%eax

1e79ee: 89 c1 mov %eax,%ecx

1e79f0: f7 d9 neg %ecx

1e79f2: a9 00 00 00 40 test $0x40000000,%eax

1e79f7: 0f 44 c8 cmove %eax,%ecx

1e79fa: 81 e1 ff ff ff 7f and $0x7fffffff,%ecx

1e7a00: 0f 57 e4 xorps %xmm4,%xmm4

1e7a03: f3 0f 2a e1 cvtsi2ss %ecx,%xmm4

1e7a07: 66 41 0f 7e b6 64 01 movd %xmm6,0x164(%r14)

1e7a0e: 00 00

1e7a10: f3 0f 59 e2 mulss %xmm2,%xmm4

1e7a14: 66 0f 6e d0 movd %eax,%xmm2

1e7a18: f3 0f 59 cc mulss %xmm4,%xmm1

1e7a1c: 0f 28 ec movaps %xmm4,%xmm5

1e7a1f: f3 0f 59 ec mulss %xmm4,%xmm5

1e7a23: f3 0f 59 ec mulss %xmm4,%xmm5

1e7a27: f3 0f 59 eb mulss %xmm3,%xmm5

1e7a2b: f3 0f 5c cd subss %xmm5,%xmm1

1e7a2f: 0f 54 c8 andps %xmm0,%xmm1

1e7a32: 66 0f df c2 pandn %xmm2,%xmm0

1e7a36: 66 0f eb c1 por %xmm1,%xmm0

1e7a3a: 66 41 0f 7e 86 68 01 movd %xmm0,0x168(%r14)

1e7a41: 00 00

1e7a43: 4c 89 f0 mov %r14,%rax

1e7a46: 5b pop %rbx

1e7a47: 41 5e pop %r14

1e7a49: 41 5f pop %r15

1e7a4b: c3 retq

1e7a4c: 0f 1f 40 00 nopl 0x0(%rax)

So the lack of automatic vectorization is not due to our code not being properly vectorizable, but more likely due to a lack of proper cost heuristics for NEON-instructions in the LLVM compiler backend (this is an assumption, if you have better information, feel free to contact me!).

Since the compiler's auto-vectorization won't help us, is there anything we can do? Not on stable Rust, as the NEON intrinsics under core::arch::arm are gated behind the nightly-only experimental feature stdsimd. I guess we'll have to brave that dark, left-hand path that is nightly Rust. So first up, let's install nightly Rust!

rustup toolchain install nightly

rustup target add armv7-unknown-linux-gnueabihf --toolchain nightly

You may want to/have to add --allow-downgrade and define a desired --profile and required --components to the toolchain install, as described in the documentation. To use the nightly tool chain, you can pass +nightly as a first argument to all your cargo calls or — and this is the approach I prefer — set a folder override. So let's navigate to our package folder and set an override:

rustup override set nightly

At this point, there are two possible approaches to achieving explicit SIMD acceleration:

- Target-specific intrinsics

- Rusts' (work-in-progress) portable SIMD API

portable-simd portable-simd's predecessor,packed_simd

In the hope of being able to write my code in such a way that it works with a variety of platforms, I decided to try the second option, but quickly found out that many necessary features aren't available yet, so ended up going with the third option, which seems to be more complete at the time of writing. To use packed_simd, add

[dependencies]

packed_simd = { version = "0.3.6", package = "packed_simd_2", features = [ "into_bits" ] }

to your Cargo.toml. Now we can fairly directly translate the (fixed) sin_quadrant_cubic function to a SIMD version and add an explicitly split loop version of sines_quadrant_cubic:

use packed_simd::*;

fn sin_quadrant_cubic_x4(phasor: u32x4) -> f32x4 {

let x = phasor;

// no explicitly wrapping operations on u32x4, so we use a regular

// left shift

let q13 = u32x4::from_cast(i32x4::from_cast(x << 1) >> 31);

// no wrapping negation on u32x4, so cast to i32x4 for negation

let xm = ((u32x4::from_cast(-i32x4::from_cast(x)) & q13)

| (x & !q13))

& 0x7fff_ffff;

// reciprocal multiplication is exact for powers of two

let xmf = f32x4::from_cast(xm) * (0x4000_0000 as f32).recip();

// no f32x4::powi

let ret = 1.5 * xmf - 0.5 * (xmf * xmf * xmf);

// no f32x4::copysign (one of the few things portable-simd has)

((u32x4::from_bits(ret) & 0x7fff_ffff) | (x & 0x8000_0000))

.into_bits()

}

pub fn sines_quadrant_cubic_simd(

phasors: &[Wrapping<u32>; 91],

) -> [f32; 91] {

let mut ret = [0.0; 91];

let mut phasor_chunks = phasors.chunks_exact(4);

let mut ret_chunks = ret.chunks_exact_mut(4);

for (p, r) in (&mut phasor_chunks).zip(&mut ret_chunks) {

// transmute p from a slice of Wrapping<u32> to a slice of u32.

// this is safe, as Wrapping is repr(transparent)

use std::mem::transmute;

let p = unsafe { transmute::<_, &[u32]>(p) };

let p = u32x4::from_slice_unaligned(p);

sin_quadrant_cubic_x4(p).write_to_slice_unaligned(r);

}

for (p, r) in phasor_chunks

.remainder()

.iter()

.zip(ret_chunks.into_remainder().iter_mut())

{

*r = sin_quadrant_cubic(*p);

}

ret

}

After adding a corresponding benchmark (and test!), we can evaluate our SIMD-optimized function.

sines/cubic_simd time: [1.7692 us 1.7733 us 1.7796 us]

Found 7 outliers among 100 measurements (7.00%)

1 (1.00%) low severe

3 (3.00%) low mild

3 (3.00%) high severe

Somewhat surprisingly, the SIMD version is more than 4× faster than the scalar version. For our (already auto-vectorized) x86_64-version, the performance is unsurprisingly effectively identical to the basic sines/quadrant_cubic. If you are interested in how the ARM/NEON assembly looks like, here goes:

001b723c <_ZN6bela_35sines25sines_quadrant_cubic_simd17h963bbd989285d3cdE>:

1b723c: e92d4070 push {r4, r5, r6, lr}

1b7240: e1a05001 mov r5, r1

1b7244: e3a01000 mov r1, #0

1b7248: e3a02f5b mov r2, #364 ; 0x16c

1b724c: e1a04000 mov r4, r0

1b7250: e3a06000 mov r6, #0

1b7254: ebf94e7c bl ac4c <memset@plt>

1b7258: e3a005c2 mov r0, #813694976 ; 0x30800000

1b725c: f2c40650 vmov.i32 q8, #1073741824 ; 0x40000000

1b7260: f2c74f58 vmov.f32 q10, #1.5 ; 0x3fc00000

1b7264: eea20b90 vdup.32 q9, r0

1b7268: f3c3665f vmov.i32 q11, #-1090519040 ; 0xbf000000

1b726c: f3c08670 vmvn.i32 q12, #-2147483648 ; 0x80000000

1b7270: e0850006 add r0, r5, r6

1b7274: e3560e15 cmp r6, #336 ; 0x150

1b7278: f460aa8f vld1.32 {d26-d27}, [r0]

1b727c: e0840006 add r0, r4, r6

1b7280: f26ac8f0 vtst.32 q14, q13, q8

1b7284: f3f9e3ea vneg.s32 q15, q13

1b7288: f35ec1fa vbsl q14, q15, q13

1b728c: f3c0c770 vbic.i32 q14, #-2147483648 ; 0x80000000

1b7290: f3fbc6ec vcvt.f32.u32 q14, q14

1b7294: f34ccdf2 vmul.f32 q14, q14, q9

1b7298: f34cedfc vmul.f32 q15, q14, q14

1b729c: f34cedfe vmul.f32 q15, q14, q15

1b72a0: f34ccdf4 vmul.f32 q14, q14, q10

1b72a4: f34eedf6 vmul.f32 q15, q15, q11

1b72a8: f24ccdee vadd.f32 q14, q14, q15

1b72ac: f36ca1f8 vbit q13, q14, q12

1b72b0: f440aa8f vst1.32 {d26-d27}, [r0]

1b72b4: 0a000001 beq 1b72c0 <_ZN6bela_35sines25sines_quadrant_cubic_simd17h963bbd989285d3cdE+0x84>

1b72b8: e2866010 add r6, r6, #16

1b72bc: eaffffeb b 1b7270 <_ZN6bela_35sines25sines_quadrant_cubic_simd17h963bbd989285d3cdE+0x34>

1b72c0: e5952168 ldr r2, [r5, #360] ; 0x168

1b72c4: eeb76a08 vmov.f32 s12, #120 ; 0x3fc00000 1.5

1b72c8: e5956160 ldr r6, [r5, #352] ; 0x160

1b72cc: eeb67a00 vmov.f32 s14, #96 ; 0x3f000000 0.5

1b72d0: e3120101 tst r2, #1073741824 ; 0x40000000

1b72d4: e5950164 ldr r0, [r5, #356] ; 0x164

1b72d8: e1a03002 mov r3, r2

1b72dc: ed9f3a31 vldr s6, [pc, #196] ; 1b73a8 <_ZN6bela_35sines25sines_quadrant_cubic_simd17h963bbd989285d3cdE+0x16c>

1b72e0: 12633000 rsbne r3, r3, #0

1b72e4: e3160101 tst r6, #1073741824 ; 0x40000000

1b72e8: e1a01006 mov r1, r6

1b72ec: e3c33102 bic r3, r3, #-2147483648 ; 0x80000000

1b72f0: 12611000 rsbne r1, r1, #0

1b72f4: e3100101 tst r0, #1073741824 ; 0x40000000

1b72f8: e3c11102 bic r1, r1, #-2147483648 ; 0x80000000

1b72fc: ee013a10 vmov s2, r3

1b7300: f3c00610 vmov.i32 d16, #-2147483648 ; 0x80000000

1b7304: ee001a10 vmov s0, r1

1b7308: e1a01000 mov r1, r0

1b730c: 12611000 rsbne r1, r1, #0

1b7310: eeb81a41 vcvt.f32.u32 s2, s2

1b7314: e3c11102 bic r1, r1, #-2147483648 ; 0x80000000

1b7318: eeb80a40 vcvt.f32.u32 s0, s0

1b731c: f26011b0 vorr d17, d16, d16

1b7320: ee021a10 vmov s4, r1

1b7324: f26021b0 vorr d18, d16, d16

1b7328: eeb82a42 vcvt.f32.u32 s4, s4

1b732c: ee211a03 vmul.f32 s2, s2, s6

1b7330: ee200a03 vmul.f32 s0, s0, s6

1b7334: ee222a03 vmul.f32 s4, s4, s6

1b7338: ee214a01 vmul.f32 s8, s2, s2

1b733c: ee203a00 vmul.f32 s6, s0, s0

1b7340: ee225a02 vmul.f32 s10, s4, s4

1b7344: ee214a04 vmul.f32 s8, s2, s8

1b7348: ee203a03 vmul.f32 s6, s0, s6

1b734c: ee200a06 vmul.f32 s0, s0, s12

1b7350: ee211a06 vmul.f32 s2, s2, s12

1b7354: ee225a05 vmul.f32 s10, s4, s10

1b7358: ee222a06 vmul.f32 s4, s4, s12

1b735c: ee244a07 vmul.f32 s8, s8, s14

1b7360: ee233a07 vmul.f32 s6, s6, s14

1b7364: ee062a10 vmov s12, r2

1b7368: ee255a07 vmul.f32 s10, s10, s14

1b736c: ee076a10 vmov s14, r6

1b7370: ee311a44 vsub.f32 s2, s2, s8

1b7374: ee300a43 vsub.f32 s0, s0, s6

1b7378: f3571110 vbsl d17, d7, d0

1b737c: ee030a10 vmov s6, r0

1b7380: e2840e16 add r0, r4, #352 ; 0x160

1b7384: f3562111 vbsl d18, d6, d1

1b7388: ee322a45 vsub.f32 s4, s4, s10

1b738c: f3530112 vbsl d16, d3, d2

1b7390: f4c0183f vst1.32 {d17[0]}, [r0 :32]

1b7394: e2840f59 add r0, r4, #356 ; 0x164

1b7398: f4c0083f vst1.32 {d16[0]}, [r0 :32]

1b739c: e2840f5a add r0, r4, #360 ; 0x168

1b73a0: f4c0283f vst1.32 {d18[0]}, [r0 :32]

1b73a4: e8bd8070 pop {r4, r5, r6, pc}

1b73a8: 30800000 .word 0x30800000

This looks pretty good. No excess bounds checking, the only real annoyance being the pointless memset call at 001b7254 we just can't seem to get rid of. But that's also a lot of duplicate code for the last three scalar values. And both issues can easily be fixed! The former by passing in an existing mutable array reference. If you are a frequent Rust user, you may think of using an array of MaybeUninit<f32x4>, but the final array_assume_init results in an even more expensive memcpy. Besides, that would involve unsafe code, and a &mut as out-reference — while not exactly idiomatic Rust — works fine and is very simple. (Note to self: check out the uninit crate) The latter can be solved by rounding up the number of sines from 91 to 92 and discarding all the complicated chunks_exact(_mut) stuff:

pub fn sines_full_simd(phasors: &[u32x4; 23], ret: &mut [f32x4; 23]) {

for (p, r) in phasors.iter().zip(ret.iter_mut()) {

*r = sin_quadrant_cubic_x4(*p);

}

}

Now let's benchmark what the effect of doing slightly more work to make everything more efficient has:

sines/full_simd time: [1.1176 us 1.1183 us 1.1192 us]

Found 4 outliers among 100 measurements (4.00%)

4 (4.00%) high severe

Nice! And the generated assembly has become a lot neater too:

001b880c <_ZN6bela_35sines15sines_full_simd17he84230b156fb0d27E>:

1b880c: e3a035c2 mov r3, #813694976 ; 0x30800000

1b8810: f2c40650 vmov.i32 q8, #1073741824 ; 0x40000000

1b8814: f2c74f58 vmov.f32 q10, #1.5 ; 0x3fc00000

1b8818: eea23b90 vdup.32 q9, r3

1b881c: f3c3665f vmov.i32 q11, #-1090519040 ; 0xbf000000

1b8820: e3a02000 mov r2, #0

1b8824: f3c08670 vmvn.i32 q12, #-2147483648 ; 0x80000000

1b8828: e0803002 add r3, r0, r2

1b882c: f463aacf vld1.64 {d26-d27}, [r3]

1b8830: e0813002 add r3, r1, r2

1b8834: f26ac8f0 vtst.32 q14, q13, q8

1b8838: e2822010 add r2, r2, #16

1b883c: f3f9e3ea vneg.s32 q15, q13

1b8840: e3520e17 cmp r2, #368 ; 0x170

1b8844: f35ec1fa vbsl q14, q15, q13

1b8848: f3c0c770 vbic.i32 q14, #-2147483648 ; 0x80000000

1b884c: f3fbc6ec vcvt.f32.u32 q14, q14

1b8850: f34ccdf2 vmul.f32 q14, q14, q9

1b8854: f34cedfc vmul.f32 q15, q14, q14

1b8858: f34cedfe vmul.f32 q15, q14, q15

1b885c: f34ccdf4 vmul.f32 q14, q14, q10

1b8860: f34eedf6 vmul.f32 q15, q15, q11

1b8864: f24ccdee vadd.f32 q14, q14, q15

1b8868: f36ca1f8 vbit q13, q14, q12

1b886c: f443aacf vst1.64 {d26-d27}, [r3]

1b8870: 1affffec bne 1b8828 <_ZN6bela_35sines15sines_full_simd17he84230b156fb0d27E+0x1c>

1b8874: e12fff1e bx lr

And on our desktop machine, this code is faster than the auto-vectorized code as well! Due both to the simpler generated code and the use of aligned loads/stores instead of unaligned loads/stores.

sines/full_simd time: [42.771 ns 42.886 ns 42.999 ns]

Found 2 outliers among 100 measurements (2.00%)

2 (2.00%) high mild

At this point, there is very little we can do to increase performance of our basic tone generation further and this post is already very long, so I'll leave the FFI for the Bela C API for the next post. Until then, feel free to follow me and send me a DM on Mastodon if you have any questions or comments. I'm also active on the Bela forum.